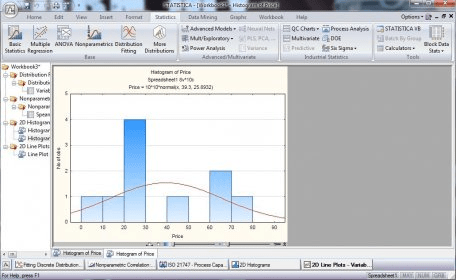

- Calculate and graph residuals in four different ways (including a QQ plot).
- Test for departure from linearity with a runs test.
- Force the regression line through a specified point.
- Calculate slope and intercept with confidence intervals.
- Analyze a stack of P values, using Bonferroni multiple comparisons or the FDR approach to identify "significant" findings or discoveries.
- Identify outliers using the Grubbs or ROUT method.
- Compare the column mean (or median) with a theoretical value using a one-sample t test or Wilcoxon signed-rank test.
- Create a QQ plot as part of normality testing.
- Lognormality test, with the likelihood of sampling from a normal (Gaussian) vs. a lognormal distribution.
- Normality testing by four methods (new: Anderson-Darling).
- Frequency distributions (bin to histogram), including cumulative histograms.
- Mean or geometric mean with confidence intervals.
- Calculate descriptive statistics: min, max, quartiles, mean, SD, SEM, CI, CV, skewness, kurtosis.
- Specify variables defining axis coordinates, color, and size.
- Use results in downstream applications like principal component regression.
- Automatically generated scree plots, loading plots, biplots, and more.
- Component selection via parallel analysis (Monte Carlo simulation), the Kaiser criterion (eigenvalue threshold), a proportion-of-variance threshold, and more.
- Fit straight lines to two data sets and determine the intersection point and both slopes.
- Easily interpolate points from the best-fit curve.
- Report the covariance matrix or set of dependencies.
- Test the adequacy of the model with a runs test or replicates test.
- Quantify the symmetry of imprecision with Hougaard’s skewness.
- Confidence intervals can be symmetrical (as is traditional) or asymmetrical (which is more accurate).
- Quantify the precision of fits with the SE or CI of parameters.
- Automatically graph the curve over a specified range of X values.
- Accept automatic initial estimated values or enter your own.
- Differentially weight points by several methods and assess how well your weighting method worked.
- Compare models using the extra sum-of-squares F test or AICc.
- Automatic outlier identification or elimination.
- Global nonlinear regression: share parameters between data sets.
- Enter different equations for different data sets.
- Enter differential or implicit equations.
- Now includes a family of growth equations: exponential growth, exponential plateau, Gompertz, logistic, and beta (growth and then decay).
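
The runs test for departure from linearity asks whether residuals of the same sign cluster together more than chance would predict; too few runs suggests the line or curve systematically misses the data. Below is a minimal sketch that uses the normal approximation to the Wald-Wolfowitz runs distribution for a one-tailed P value. That approximation is an assumption made for simplicity and may not match how Prism computes its P value, especially for small samples; `runs_test` is a hypothetical name.

```python
from statistics import NormalDist
from typing import Sequence, Tuple


def runs_test(residuals: Sequence[float]) -> Tuple[int, float, float]:
    """Return (observed runs, expected runs, one-tailed P) for residual signs.

    Uses the normal approximation to the Wald-Wolfowitz runs distribution.
    """
    # Residuals of exactly zero have no sign, so they are skipped.
    signs = [r > 0 for r in residuals if r != 0]
    n_pos = sum(signs)
    n_neg = len(signs) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need both positive and negative residuals")
    n = n_pos + n_neg
    # A new run starts every time the sign flips.
    runs = 1 + sum(1 for a, b in zip(signs, signs[1:]) if a != b)
    expected = 1 + 2 * n_pos * n_neg / n
    variance = 2 * n_pos * n_neg * (2 * n_pos * n_neg - n) / (n ** 2 * (n - 1))
    if variance <= 0:
        raise ValueError("too few residuals for the normal approximation")
    z = (runs - expected) / variance ** 0.5
    # One-tailed: unusually FEW runs (clustered signs) is the warning sign.
    p = NormalDist().cdf(z)
    return runs, expected, p
```

A straight line fitted to curved data tends to leave residuals of one sign at both ends and the opposite sign in the middle, which produces few runs and a small P value.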
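
To make the two ways of analyzing a stack of P values concrete, here is a rough sketch assuming plain Python lists. Bonferroni flags a P value only if it is at most α/m (m = number of tests), while the FDR function uses the classic Benjamini-Hochberg step-up rule. Prism offers more than one FDR method, so this is not necessarily the variant it applies; the names `bonferroni` and `benjamini_hochberg` are hypothetical.

```python
from typing import List


def bonferroni(p_values: List[float], alpha: float = 0.05) -> List[bool]:
    """Flag each P value that stays significant after Bonferroni correction."""
    m = len(p_values)
    # Each test is judged against alpha divided by the number of tests.
    return [p <= alpha / m for p in p_values]


def benjamini_hochberg(p_values: List[float], q: float = 0.05) -> List[bool]:
    """Flag discoveries with the Benjamini-Hochberg step-up procedure (FDR = q)."""
    m = len(p_values)
    # Indices of the P values, sorted from smallest to largest P.
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest rank k (1-based) whose P value is <= (k / m) * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    # Step-up rule: every P value ranked at or below k_max is a discovery.
    flags = [False] * m
    for idx in order[:k_max]:
        flags[idx] = True
    return flags


if __name__ == "__main__":
    stack = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]
    print(bonferroni(stack))
    print(benjamini_hochberg(stack))
```

With those ten P values and α = q = 0.05, the Bonferroni threshold is 0.005, so only 0.001 survives; the FDR rule compares the k-th smallest P value with (k/10)·0.05 and also counts 0.008 as a discovery.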
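
Comparing two fits by AICc needs only each fit's sum of squared residuals (SS), the number of points N, and a parameter count K. The sketch below assumes the common least-squares form AICc = N·ln(SS/N) + 2K + 2K(K+1)/(N - K - 1) and turns the AICc difference into the probability that the first model is the better of the two (its Akaike weight). Counting K as the fitted parameters plus one is an assumed convention, and `aicc` and `compare_aicc` are hypothetical names.

```python
import math
from typing import Tuple


def aicc(ss: float, n: int, k: int) -> float:
    """Corrected AIC for a least-squares fit.

    ss: sum of squared residuals, n: number of data points,
    k: number of fitted parameters plus one (assumed convention).
    """
    if n - k - 1 <= 0:
        raise ValueError("AICc needs n > k + 1")
    return n * math.log(ss / n) + 2 * k + 2 * k * (k + 1) / (n - k - 1)


def compare_aicc(ss1: float, k1: int, ss2: float, k2: int, n: int) -> Tuple[float, float]:
    """Return (AICc2 - AICc1, probability that model 1 is the better model)."""
    delta = aicc(ss2, n, k2) - aicc(ss1, n, k1)
    # Akaike weight of model 1 when only these two models are compared.
    prob_model1 = 1.0 / (1.0 + math.exp(-0.5 * delta))
    return delta, prob_model1
```

A positive difference favors model 1. If the second model adds a parameter without lowering the sum of squares, its AICc is higher, so the simpler model is preferred.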

- Three-way ANOVA (limited to two levels in two of the factors, and any number of levels in the third).
- Tukey, Newman-Keuls, Dunnett, Bonferroni, Holm-Sidak, or Fisher’s LSD multiple comparisons testing main and simple effects.
- Two-way ANOVA, with repeated measures in one or both factors.
- Two-way ANOVA, even with missing values (with some post tests).
- Calculate the relative risk and odds ratio with confidence intervals.
- Fisher's exact test or the chi-square test.
- Kruskal-Wallis or Friedman nonparametric one-way ANOVA with Dunn's post test.
- Greenhouse-Geisser correction, so repeated-measures one-, two-, and three-way ANOVA do not have to assume sphericity. When this is chosen, multiple comparison tests also do not assume sphericity.
- Many multiple comparisons tests are accompanied by confidence intervals and multiplicity-adjusted P values.
- One-way ANOVA without assuming populations with equal standard deviations, using Brown-Forsythe and Welch ANOVA, followed by appropriate multiple comparisons tests (Games-Howell, Tamhane T2, Dunnett T3).
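
For a 2×2 table (rows: exposed/unexposed, columns: event/no event), the relative risk and odds ratio and their confidence intervals can be sketched with the familiar log-scale approximations (Katz for the relative risk, Woolf for the odds ratio). These interval methods are assumptions chosen for simplicity; Prism may use other methods, so its limits can differ. The function name `risk_and_odds` and the cell labels a to d are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple

Interval = Tuple[float, float, float]  # (estimate, lower, upper)


def risk_and_odds(a: int, b: int, c: int, d: int, level: float = 0.95) -> Tuple[Interval, Interval]:
    """Relative risk and odds ratio with log-scale confidence intervals.

    Table layout (hypothetical labels):
        exposed:   a events, b non-events
        unexposed: c events, d non-events
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("all four cells must be positive for these intervals")
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for 95%

    # Relative risk: ratio of the two event proportions (Katz log interval).
    rr = (a / (a + b)) / (c / (c + d))
    se_log_rr = math.sqrt(1 / a - 1 / (a + b) + 1 / c - 1 / (c + d))

    # Odds ratio: cross-product ratio (Woolf logit interval).
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)

    def interval(estimate: float, se: float) -> Interval:
        # Build the interval on the log scale, then transform back.
        log_est = math.log(estimate)
        return estimate, math.exp(log_est - z * se), math.exp(log_est + z * se)

    return interval(rr, se_log_rr), interval(odds_ratio, se_log_or)
```

Because each interval is symmetrical on the log scale, it is asymmetrical around the estimate itself; for 20 of 100 exposed vs. 10 of 100 unexposed subjects with the event, the sketch gives a relative risk of 2.0 and an odds ratio of 2.25.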
